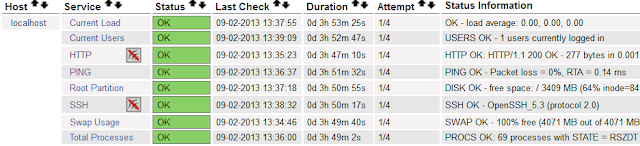

Nagios is an excellent monitoring tool for servers and network devices.

Besides the many useful plugins at Nagios Exchange (http://exchange.nagios.org), we can also write our own plugins using shell scripts.

We can set up a Nagios monitoring server by following Setting up Nagios monitoring server. The default settings and configuration are sufficient if we are only monitoring a few servers. However, as the number of monitored hosts and services increases, check latency becomes noticeable.

This is because Nagios continuously updates several files on disk. The more items there are to monitor, the more disk I/O is required; eventually I/O becomes the bottleneck and slows down the Nagios checks.

To solve this problem, we need to either improve I/O performance or reduce I/O requests. We could install Nagios on an SSD, but that is not cost-effective.

In an earlier post, using tmpfs to improve PostgreSQL performance, we pointed stats_temp_directory to tmpfs to boost the performance of PostgreSQL.

Similarly, if some files are only needed while Nagios is running, we can move them to tmpfs and thereby reduce I/O requests.

In Nagios, a few key files account for most of the disk I/O:

1. /usr/local/nagios/var/status.dat: this status file stores the current status of all monitored services and hosts. It is updated constantly, at the interval defined by status_update_interval; in my default Nagios installation, status_file is updated every 10 seconds.

The contents of the status file are deleted every time Nagios restarts, so the file is only useful while Nagios is running.

[root@centos /usr/local/nagios/etc]# grep '^status' nagios.cfg

status_file=/usr/local/nagios/var/status.dat

status_update_interval=10

2. /usr/local/nagios/var/objects.cache: this file is a cached copy of the object definitions, and the CGIs read it to get those definitions.

The file is recreated every time Nagios starts, so objects.cache doesn't need to be on non-volatile storage.

[root@centos /usr/local/nagios/etc]# grep objects.cache nagios.cfg

object_cache_file=/usr/local/nagios/var/objects.cache

3. /usr/local/nagios/var/spool/checkresults: all incoming check results are stored here. While Nagios is running, files in this directory are created and deleted constantly, so checkresults can also be moved to tmpfs.

[root@centos /usr/local/nagios/etc]# grep checkresults nagios.cfg

check_result_path=/usr/local/nagios/var/spool/checkresults

[root@centos /usr/local/nagios/etc]#

[root@centos /usr/local/nagios/var/spool/checkresults]# ls

checkP2D5bM cn6i6Ld cn6i6Ld.ok

[root@centos /usr/local/nagios/var/spool/checkresults]# head -4 cn6i6Ld

### Active Check Result File ###

file_time=1385437541

### Nagios Service Check Result ###

[root@centos /usr/local/nagios/var/spool/checkresults]#
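The three grep commands above can be folded into one small helper. This is only a sketch: the function name nagios_cfg_get is made up for illustration, and it assumes the plain name=value layout shown in the nagios.cfg excerpts. If a directive appears more than once, it simply prints the last occurrence.

```shell
# Print the value of one directive from a Nagios main config file.
# Usage: nagios_cfg_get DIRECTIVE [CONFIG_FILE]
# (nagios_cfg_get is a hypothetical helper, not part of Nagios.)
nagios_cfg_get() {
    directive=$1
    cfg=${2:-/usr/local/nagios/etc/nagios.cfg}
    awk -v key="$directive" '
        /^[[:space:]]*#/ { next }              # skip comment lines
        {
            pos = index($0, "=")               # split on the first "="
            if (pos == 0) next
            name = substr($0, 1, pos - 1)
            gsub(/^[[:space:]]+|[[:space:]]+$/, "", name)
            if (name == key) value = substr($0, pos + 1)
        }
        END { if (value != "") print value }
    ' "$cfg"
}

# Example: list the three paths discussed above.
# for d in status_file object_cache_file check_result_path; do
#     printf '%s -> %s\n' "$d" "$(nagios_cfg_get "$d")"
# done
```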

So we can move status.dat, objects.cache and checkresults to tmpfs, but first we need to mount the file system:

[root@centos ~]# mkdir -p /mnt/nagvar

[root@centos ~]# mount -t tmpfs tmpfs /mnt/nagvar -o size=50m

[root@centos ~]# df -h /mnt/nagvar

Filesystem Size Used Avail Use% Mounted on

tmpfs 50M 0 50M 0% /mnt/nagvar

[root@centos ~]# mount | grep nagvar

tmpfs on /mnt/nagvar type tmpfs (rw,size=50m)
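The 50 MB size is what worked for this installation; a larger setup may need more. One rough way to check is to add up the current on-disk size of the three paths before moving them. The helper below is a sketch with a made-up name (nagios_volatile_kb), and it only measures a snapshot, so it is worth leaving generous headroom on top of the number it prints.

```shell
# Sum the disk usage (in KiB) of the given files/directories.
# Paths that do not exist are skipped.
# (nagios_volatile_kb is a hypothetical helper, not part of Nagios.)
nagios_volatile_kb() {
    total=0
    for path in "$@"; do
        [ -e "$path" ] || continue
        kb=$(du -sk "$path" | awk '{ print $1 }')
        total=$((total + kb))
    done
    echo "$total"
}

# Example, using the default paths from this post:
# nagios_volatile_kb /usr/local/nagios/var/status.dat \
#                    /usr/local/nagios/var/objects.cache \
#                    /usr/local/nagios/var/spool/checkresults
```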

Create the directory for checkresults:

[root@centos ~]# mkdir -p /mnt/nagvar/spool/checkresults

[root@centos ~]# chown -R nagios:nagios /mnt/nagvar

Modify nagios.cfg:

status_file=/mnt/nagvar/status.dat

object_cache_file=/mnt/nagvar/objects.cache

check_result_path=/mnt/nagvar/spool/checkresults
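If you prefer not to edit the file by hand, the same three changes can be scripted. The following is a minimal sketch: nagios_use_tmpfs is a hypothetical function name, it assumes each directive starts at the beginning of a line as in the excerpts above, and it assumes the target directory contains no "|" or "&" characters (they would confuse sed). It keeps a .bak copy of the original file.

```shell
# Point the three volatile paths in a Nagios config at a tmpfs directory.
# Usage: nagios_use_tmpfs CONFIG_FILE TMPFS_DIR
# (nagios_use_tmpfs is a hypothetical helper, not part of Nagios.)
nagios_use_tmpfs() {
    cfg=$1
    dir=$2
    # Keep a backup, then rewrite the original in place from the backup.
    cp -p "$cfg" "$cfg.bak" || return 1
    sed -e "s|^status_file=.*|status_file=$dir/status.dat|" \
        -e "s|^object_cache_file=.*|object_cache_file=$dir/objects.cache|" \
        -e "s|^check_result_path=.*|check_result_path=$dir/spool/checkresults|" \
        "$cfg.bak" > "$cfg"
}

# Example:
# nagios_use_tmpfs /usr/local/nagios/etc/nagios.cfg /mnt/nagvar
```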

Restart Nagios so that our changes take effect:

[root@centos ~]# service nagios restart

Running configuration check...done.

Stopping nagios: done.

Starting nagios: done.

We can see that Nagios is now using /mnt/nagvar:

[root@centos ~]# tree /mnt/nagvar/

/mnt/nagvar/

├── objects.cache

├── spool

│   └── checkresults

│       ├── ca8JfZI

│       └── ca8JfZI.ok

└── status.dat

2 directories, 4 files

We can configure /etc/fstab to mount /mnt/nagvar every time the system boots:

[root@centos ~]# cat <<EOF >> /etc/fstab

tmpfs /mnt/nagvar tmpfs defaults,size=50m 0 0

EOF
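Appending to /etc/fstab more than once leaves duplicate entries behind. A guarded variant might look like the sketch below; add_tmpfs_fstab is a made-up name, and the third argument exists only so the function can be tried against a scratch file before touching the real /etc/fstab.

```shell
# Append a tmpfs entry to an fstab file unless the mount point is already listed.
# Usage: add_tmpfs_fstab MOUNT_POINT SIZE [FSTAB_FILE]
# (add_tmpfs_fstab is a hypothetical helper.)
add_tmpfs_fstab() {
    mount_dir=$1
    size=$2
    fstab=${3:-/etc/fstab}
    # Field 2 of an fstab line is the mount point; ignore comment lines.
    if awk -v mp="$mount_dir" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"
    then
        return 0    # already present, nothing to do
    fi
    printf 'tmpfs %s tmpfs defaults,size=%s 0 0\n' "$mount_dir" "$size" >> "$fstab"
}

# Example:
# add_tmpfs_fstab /mnt/nagvar 50m
```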

However, the directory /mnt/nagvar/spool/checkresults will be gone after /mnt/nagvar is re-mounted, so we need to create it before starting Nagios.

We can update /etc/init.d/nagios and add these lines after the first line:

mkdir -p /mnt/nagvar/spool/checkresults

chown -R nagios:nagios /mnt/nagvar

[root@centos ~]# sed -i '1a\
mkdir -p /mnt/nagvar/spool/checkresults\
chown -R nagios:nagios /mnt/nagvar' /etc/init.d/nagios
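Running that sed command a second time would insert the two lines again. A guarded version could look like the sketch below. The function name patch_nagios_init is invented for illustration, and it writes through a temporary file instead of using sed -i so that the script keeps its original permissions.

```shell
# Insert the mkdir/chown lines after line 1 of an init script, but only once.
# Usage: patch_nagios_init [INIT_SCRIPT]
# (patch_nagios_init is a hypothetical helper.)
patch_nagios_init() {
    script=${1:-/etc/init.d/nagios}
    # Already patched? Then leave the file alone.
    if grep -q '^mkdir -p /mnt/nagvar/spool/checkresults$' "$script"; then
        return 0
    fi
    tmp=$(mktemp) || return 1
    # Same "append after line 1" edit as above, written to a temp file first.
    sed '1a\
mkdir -p /mnt/nagvar/spool/checkresults\
chown -R nagios:nagios /mnt/nagvar' "$script" > "$tmp" && cat "$tmp" > "$script"
    rc=$?
    rm -f "$tmp"
    return $rc
}

# Example:
# patch_nagios_init /etc/init.d/nagios
```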

Since these files now live in tmpfs, they no longer generate disk I/O, and we should see a clear improvement in Nagios check latency.
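To put a number on the change, one option is to compare the average check latency before and after the move. The sketch below reads the check_latency fields that Nagios writes into status.dat for each host and service. The function name avg_check_latency is made up, and the default path assumes the /mnt/nagvar layout used in this post.

```shell
# Print the average of all check_latency values (seconds) in a status file.
# Usage: avg_check_latency [STATUS_FILE]
# (avg_check_latency is a hypothetical helper, not part of Nagios.)
avg_check_latency() {
    status=${1:-/mnt/nagvar/status.dat}
    awk -F= '
        /^[[:space:]]*check_latency=/ { sum += $2; n++ }
        END {
            if (n > 0) printf "%.3f\n", sum / n
            else print "n/a"        # no latency entries found
        }
    ' "$status"
}

# Example: run once against the old status file, then again after the switch.
# avg_check_latency /usr/local/nagios/var/status.dat
# avg_check_latency /mnt/nagvar/status.dat
```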

Reference:

http://assets.nagios.com/downloads/nagiosxi/docs/Utilizing_A_RAM_Disk_In_NagiosXI.pdf